High-energy physicists working at the Compact Muon Solenoid (CMS) detector at the Large Hadron Collider (LHC) at Cern in Switzerland are benefiting from a NoSQL database management system that gives them unified access to data from a slew of sources.

Valentin Kuznetsov, a research associate and computer specialist at Cornell University, is a member of a team providing data management to the CMS Cern project. It built a system using MongoDB in preference to relational database technologies and other non-relational options.

"We considered a number of different options, including file-based and in-memory caches, as well as key-value databases, but ultimately decided that a document-oriented database would best suit our needs," he says. "After evaluating several applications, we chose MongoDB due to its support of dynamic queries, full indexes, including inner objects and embedded arrays, as well as auto-sharding."

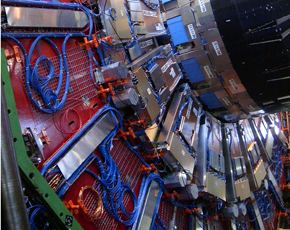

The CMS (pictured) is one of two general-purpose particle physics detectors at the LHC. It collects data from experiments that recreate conditions like those just after the big bang, with the aim of understanding how matter and force particles get their mass. More than 3,000 physicists from 183 institutions representing 38 countries are involved in the design, construction and maintenance of the CMS experiment.

Data management on a big scale

Cornell is one of many institutions worldwide that contribute to the LHC experiments at Cern. Kuznetsov, a physicist and software engineer who has worked at Cern in the past, is involved in the data management group at Cern.

He says some five years ago the data management group at the CMS confronted a data discovery problem, with a variety of databases necessitating a user interface that would hide the complexity of the underlying architecture from the physicists. It wanted to build a Google-like interface, but one that would return precise answers to queries whose form could not be determined in advance.

"We had a variety of distributed databases and different formats – HTML, XML, JSON files, and so on. And then there is the security dimension. The complexity was, and is, huge", he says.

The team discovered the world of NoSQL databases and, within that, the document-oriented approach seemed the best fit, with MongoDB being favoured because it lent itself well to the end product being an interface that could be queried in free text form.

MongoDB belongs to the document-store sub-genre of NoSQL databases, as does Apache CouchDB.

Barry Devlin, a data warehousing expert who blogs on TechTarget's B-Eye Network, explains what is meant by a document-oriented approach: "Documents? If you're confused, you are not alone. In this context, we don't mean textual documents used by humans, but rather use the word in a programming sense as a collection of data items stored together for convenience and ease of processing, generally without a pre-defined schema."

Ten petabytes of data per year

The CMS spans more than 100 datacentres in a three-tier model and generates around 10PB of data each year in real data, simulated data and metadata. This information is stored and retrieved from relational and non-relational data sources, such as relational databases, document-oriented databases, blogs, wikis, file systems and customised applications.

To provide the ability to search and aggregate information across this complex data landscape, CMS's Data Management and Workflow Management (DMWM) project created a data aggregation system (DAS), built on MongoDB.

The DAS provides a layer on top of the existing data sources that allows researchers and other staff to query data via free text-based queries. It then aggregates the results from across distributed providers, while preserving their integrity, security policy and data formats, and represents that data in a defined format.

All DAS queries can be expressed in free-text form, either as a set of keywords or as key-value pairs, where a pair can represent a condition. Users query the system in a simple, SQL-like language, which is then transformed into MongoDB query syntax, itself a JSON (JavaScript Object Notation) record, says Kuznetsov.
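
To make the idea concrete, here is a minimal Python sketch of how a free-text query made of keywords and key-value conditions might be turned into a MongoDB-style filter document. It is not the DAS parser: the function name `to_mongo_query`, the operator table and the example field names are all assumptions for illustration. Only the `$gt`, `$gte`, `$lt`, `$lte` and `$exists` operators are standard MongoDB query operators.

```python
import json

# Hypothetical mapping from comparison symbols to MongoDB query operators.
# The real DAS query language may use different syntax.
_OPERATORS = {">=": "$gte", "<=": "$lte", ">": "$gt", "<": "$lt"}


def _coerce(value):
    """Turn numeric-looking strings into numbers; leave other text as is."""
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value


def to_mongo_query(text):
    """Translate 'keyword key=value key>value ...' into a filter dict."""
    query = {}
    for token in text.split():
        # Check two-character symbols first so '>=' is not read as '>'.
        for symbol in (">=", "<=", ">", "<", "="):
            if symbol in token:
                key, _, raw = token.partition(symbol)
                value = _coerce(raw)
                if symbol == "=":
                    query[key] = value
                else:
                    condition = query.get(key)
                    if not isinstance(condition, dict):
                        condition = {}
                        query[key] = condition
                    condition[_OPERATORS[symbol]] = value
                break
        else:
            # A bare keyword: only require that the field is present.
            query[token] = {"$exists": True}
    return query


if __name__ == "__main__":
    # Field names here are illustrative, not taken from DAS.
    example = "dataset site=T1_CH_CERN run>=160000 run<160500"
    print(json.dumps(to_mongo_query(example), indent=2))
```

Because the result is a plain dictionary, it serialises directly to JSON, which is the sense in which a MongoDB query "is itself a JSON record".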

"Due to the schema-less nature of the underlying MongoDB back end we are able to store DAS records of any arbitrary structure, regardless of whether it's a dictionary, lists, key-value pairs, and so on. Therefore, every DAS key has a set of attributes describing its JSON structure," says Kuznetsov.

Kuznetsov says it is now clear that "there was nothing specific to the system related to our experiment". The approach is extensible.

At Cornell, other groups, including in ornithology, are facing similar problems and have expressed interest. He stresses that "analytics should be the main ingredient. The system should learn from what is being asked and be able to answer more questions in a free way.

"The beauty of MongoDB," he says, "is that the query language is built into the system," unlike, for example, Couch, also in use at Cern, where users need to code for each query.

"DAS is used 24 hours a day, seven days a week, by CMS physicists, data operators and data managers at research facilities around the world. The average query may resolve into thousands of documents, each a few kilobytes in size. [We get a] throughput of around 6,000 documents a second for raw cache population," concludes Kuznetsov.

The Cern physicists are at work unlocking the mysteries of the universe, and NoSQL technology is, whether they know it or not, helping them do it.

Image courtesy of Arpad Horvath